From ASICs to GPUs: Why the Transition from Mining to AI Is Harder Than You Think

Bitcoin miners are eyeing AI/HPC as the next frontier, but GPU infrastructure isn't mining with different hardware. See what the transition actually requires—and where mining experience helps or hurts.

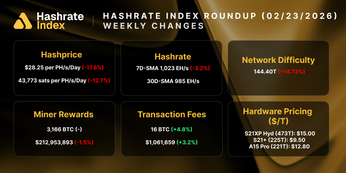

Hashprice reached an all-time low in November 2025 and again this week. Mining economics have swung like a pendulum since the halving, and there is evidence that the economics themselves have shifted.

For companies seeking new revenue streams, the next logical place to point compute is AI and high-performance computing (HPC). Both are still in their infancy, yet there is already widespread speculation that they are in a bubble. Bubble or not, it is hard to deny that AI/HPC has already changed work and productivity significantly.

Some major public miners have announced an AI/HPC strategy, but executing a transition is a different challenge. The gap between "we should diversify into AI/HPC" and "we've successfully deployed GPU infrastructure" is larger than most operators anticipate. Mining expertise is simultaneously one of your greatest assets and one of your greatest liabilities.

Why Miners Are Looking at AI/HPC

AI may be a ubiquitous buzzword in every tech headline, conference keynote, and software changelog, but it is also a serious innovation in a part of the tech industry that miners know well: compute.

Three Forces Creating This Moment

Beyond that familiarity with compute, three forces are pushing miners toward AI/HPC at the same time:

1. Economics

Hashprice compression post-halving has squeezed margins across the industry. Single-commodity exposure to Bitcoin price creates volatility that boards and investors increasingly want to hedge. Diversification isn't just strategic. For some operations, it's survival.

2. Capital Access

Miners pursuing AI/HPC are encountering debt terms they have never seen for ASIC financing (with Luxor’s Miner Financing being a key exception). Credit funds that wouldn't touch Bitcoin mining are eager to finance GPU infrastructure. Companies are raising billions rather than millions, and the cost of capital is significantly lower.

3. Power

The megawatts miners have already secured are the hardest piece of the AI/HPC puzzle for newcomers. Hyperscale data center capacity is sold out until 2028 or 2029. Your existing power contracts and utility relationships aren't just assets—they're your entry ticket.

What Does a Mining-to-AI Transition Look Like?

These forces are producing different transition strategies.

- Hybrid / “Mullet Mining”: Some miners are running hybrid operations, sometimes called “Mullet Mining”—AI business in the front, mining party in the back. Their key advantage is using mining's flexible load to balance the 100% uptime requirements of AI/HPC.

- Partnerships: Some miners are partnering with hyperscalers as colocation providers, essentially becoming power and space landlords.

- Diversification: Some miners are adding AI/HPC as a diversification layer while maintaining significant mining operations.

- Pivot: Some miners are pivoting entirely, exiting Bitcoin to reposition as AI infrastructure providers (though this trades one form of single-commodity exposure for another).

The common thread: nearly every major public miner has announced something. Roughly one-third of AI workloads now run on neocloud infrastructure rather than hyperscalers, and that share is growing. The market is real.

But the window won't stay open indefinitely. Purpose-built AI/HPC facilities are coming online. The competitive advantage of repurposing mining infrastructure will compress as supply catches up to demand.

The Infrastructure Gap—GPUs Aren't T-Shirt Sizes

If you've spent years buying ASICs, GPU procurement will feel like a different planet.

Why Can't You Order GPUs Like ASICs?

Let's start with an analogy. ASICs are like t-shirts: you pick a design and color you like in an XL, and it fits like almost every other XL t-shirt you've ever owned. You can wear it around the house, to a concert, or to a BBQ. It's versatile, and it does what you need it to do.

GPUs are like tailored suits. You buy one because you have a specific need for it, not to wear around the house or to a BBQ. You go to a tailor you trust because the process takes time and is deeply personal. You can save some of that time and money by ordering a suit “off the rack,” but it will never fit the same way.

ASICs are commodities. You pick a model, order a quantity, and every unit is essentially identical. GPUs are bespoke. Every build is configured for a specific workload, and the configuration decisions are extensive. The industry uses the acronym SCORN—Storage, CPUs, Operating System, RAM, NICs—to describe the spec sheet for a single server. Every component matters, and every component needs to work together. There are no t-shirt sizes. You can't just order "a GPU server" the way you order an S19.
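To make the SCORN idea concrete, here is a minimal Python sketch of a single-server spec sheet. The class name, the field names, and every sample value are hypothetical illustrations, not a real vendor configuration or an industry schema.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ScornSpec:
    """Hypothetical spec sheet for one GPU server, one field per SCORN letter."""
    storage: str           # S: drive type and capacity
    cpus: str              # C: CPU model and socket count
    operating_system: str  # O: OS image the workload expects
    ram: str               # R: memory capacity
    nics: str              # N: network interface cards


def missing_components(spec: ScornSpec) -> list[str]:
    """Return the SCORN fields that are still blank on a spec sheet."""
    return [f.name for f in fields(spec) if not getattr(spec, f.name).strip()]


# Illustrative values only; a real build is sized for a specific workload.
draft = ScornSpec(
    storage="4x NVMe SSD",
    cpus="2x server-class CPU",
    operating_system="",
    ram="2 TB",
    nics="",
)
print(missing_components(draft))
```

An ASIC order has no equivalent of this check: there is nothing to leave blank. A GPU server order is incomplete until every field is filled in and the choices are compatible with one another.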

What Makes GPU Procurement So Complex?

GPU procurement complexity cascades into a coordination challenge that derails more transitions than any single technical problem. Hardware, colocation, and connectivity are a three-way dependency. Your GPUs arrive but your colo space isn't ready. Your colo is ready but fiber isn't lit. Your fiber is connected but your hardware is delayed by component shortages—RAM and SSD prices are currently rising 25–100% per week as major AI labs buy out inventory. Each piece depends on the others, and timelines slip in ways that mining operators aren't accustomed to. Typical colocation lead times run around nine months. Procurement timelines stretch six to eighteen months under ideal conditions. Labor constraints for data center construction add another variable.
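The three-way dependency reduces to a simple rule: a site goes live only when the slowest of its dependencies is ready. The Python below is a minimal sketch of that rule. The function name is hypothetical, the nine-month colocation figure and the six-to-eighteen-month procurement range come from the paragraph above, and the fiber lead time is a made-up placeholder.

```python
def months_to_go_live(lead_times_months: dict[str, float]) -> tuple[str, float]:
    """Return the gating dependency and its lead time in months.

    Assumes all dependencies start in parallel on day one, so the
    slowest one determines when the site can go live.
    """
    gating = max(lead_times_months, key=lead_times_months.get)
    return gating, lead_times_months[gating]


scenarios = {
    "fast hardware": {"hardware": 6, "colocation": 9, "fiber": 7},   # fiber: assumed
    "slow hardware": {"hardware": 18, "colocation": 9, "fiber": 7},  # fiber: assumed
}

for name, lead_times in scenarios.items():
    gating, months = months_to_go_live(lead_times)
    print(f"{name}: gated by {gating} at {months} months")
```

Speeding up anything other than the gating item does not move the go-live date, which is why a slip in any one of the three stalls the other two.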

And procurement is just the beginning. The operational complexity extends well beyond getting hardware racked.

What Happens After the Hardware Arrives?

Hardware management for GPUs isn't set-and-forget. Unlike ASICs—plug in, point at a pool, monitor hashrate—GPU servers require OS configuration, driver management, firmware updates, and ongoing compatibility management across every component in the stack. When something breaks or underperforms, diagnosis is more complex than swapping a hashboard.

Network management is infrastructure, not an afterthought. AI/HPC workloads demand more than an internet connection. Training clusters need high-bandwidth, low-latency interconnects between servers. Inference workloads need low-latency connections to end users. The networking architecture you design constrains what workloads you can serve.

Monetization requires a strategy. Mining monetization is automatic—hashrate flows to a pool, BTC flows back. GPU monetization requires a go-to-market approach. Are you selling capacity to hyperscalers? Neoclouds? Direct enterprise customers? Each has different sales cycles, contract structures, and margin profiles. You're not just operating infrastructure—you're operating a business with customers who have expectations, SLAs, and alternatives.

None of this is insurmountable. But miners who assume GPU infrastructure is "mining with different hardware" will underestimate the coordination, the operational overhead, and the time required to reach revenue.

Where Mining Experience Helps (and Where It Doesn't)

Mining experience can be valuable in the transition to AI/HPC, but it's selectively valuable, and knowing where it applies is critical.

Mining Experience That Applies to AI Data Center Infrastructure

What transfers well: power management at scale. Miners understand utility relationships, power contracts, demand response, and what it takes to keep megawatts flowing reliably. Your existing power assets took years to secure, and they're exactly what AI/HPC buildouts need. Site operations experience translates too—managing physical infrastructure, dealing with cooling and environmental controls, handling logistics and maintenance in remote or challenging locations. And miners understand high-density compute environments in ways that traditional enterprise IT teams often don't. You've managed heat, you've managed uptime, you've managed scale.

But some of that experience creates blind spots.

Where Will Mining Instincts Lead You Astray?

Miners are used to fast deployment timelines. ASICs arrive, you rack them, you point them at a pool, and you're hashing within days. GPU infrastructure doesn't work that way. The coordination complexity and lead times we covered earlier mean you're planning in quarters or years, not weeks. Assuming GPU deployment will match ASIC deployment speed is one of the most common mistakes.

Miners are used to standardized infrastructure. ASICs have straightforward power and cooling requirements that scale linearly. GPU servers—especially the latest generations—require cooling beyond what many mining facilities can handle without significant retrofits. Liquid cooling is increasingly standard, particularly for edge deployments. Your existing airflow design may not translate.

Miners are used to minimal compliance overhead. Export controls apply to GPUs in ways they don't to ASICs. Advanced chips have restrictions on where they can be shipped and to whom. If you're operating internationally or selling to certain customer segments, compliance becomes a real operational consideration—not just paperwork.

Energy and Compute: Why Miners Have an Edge

The good news: the market is moving toward miners. Energy and compute are becoming integrated concepts in mainstream AI infrastructure. Most data center operators are looking to add energy to the grid or generate their own power to support AI workloads. This is territory miners already understand. The PTC conference earlier this year emphasized that energy sourcing has become a critical part of data center planning from the earliest stages—an area where mining operations have years of operational knowledge.

The key is knowing which instincts to trust and which to override.

The Market Is Moving—Training Now, Inference Next

Understanding the AI infrastructure market requires understanding what that infrastructure actually does. Every AI workload falls into one of two categories: training or inference.

Training vs Inference: What's the Difference?

Training is teaching a model. It requires processing massive datasets to build or update the AI. It's computationally intense, happens once or periodically, and doesn't need to be close to end users. Training workloads are why you're seeing massive GPU clusters built in rural areas with cheap power. Latency to users doesn't matter when you're training a model; latency between servers does. That's what drives the density of these facilities.

Inference is using a trained model. It’s the input and output of every ChatGPT response, every AI-generated image, every autonomous vehicle decision. It happens millions of times per day across every deployed AI application, and it's latency-sensitive. When a customer service chatbot needs to respond in real time, the compute can't be 500 miles away.

The Rise of Edge AI Deployment

Right now, LLM training workloads dominate AI data center buildout. That's the current wave. But the next wave is inference—and inference changes the infrastructure equation.

Inference and agentic AI workloads need edge deployments, typically within 100 miles of major metro areas. As AI applications proliferate, inference demand is growing faster than training demand. Agentic AI—systems that take actions continuously rather than just responding to prompts—compounds this further.

Matching Your Site to the Market

Your mining site's location and characteristics may align better with one workload type than the other. A remote site with abundant cheap power could be well-positioned for training. A site closer to population centers might have an inference opportunity as edge deployment demand grows.
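As a rough illustration of that matching exercise, the sketch below sorts a site by its distance to the nearest major metro area. The 100-mile cutoff is the typical edge radius cited above. The function name and the sample distances are hypothetical, and a real evaluation would also weigh power cost, cooling, and connectivity.

```python
EDGE_RADIUS_MILES = 100  # typical edge-deployment radius cited in the article


def likely_workload_fit(miles_to_major_metro: float) -> str:
    """First-pass guess at which workload type a site's location suits."""
    if miles_to_major_metro < 0:
        raise ValueError("distance cannot be negative")
    if miles_to_major_metro <= EDGE_RADIUS_MILES:
        return "inference"
    return "training"


for miles in (40, 350):  # hypothetical sites
    print(f"{miles} miles from metro: {likely_workload_fit(miles)}")
```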

Site evaluation should account for where the market is heading, not just where it is today. Training workloads will continue growing, but inference is where the growth curve is steepest.

The Timing Question

The question isn't whether miners should consider AI/HPC. It's when. The market opportunity is real, the capital is available, and mining operations have genuine strategic advantages, particularly in power assets and infrastructure experience.

Should You Wait or Move Now?

If you're still evaluating whether this transition makes sense for your operation, take the time to do it right. Three to six months in the planning and assessment phase is reasonable for a decision of this magnitude. Rushing into "we need to do AI" without understanding the infrastructure requirements, operational complexity, and go-to-market challenges is how transitions fail.

The Coordination Starts Earlier Than You Think

If you've already decided to move forward, understand that procurement and deployment timelines mean the coordination should have started months ago. The longest lead time items—colocation buildouts, fiber connectivity, hardware procurement amid component shortages—are consistently underestimated. If you're targeting a specific go-live date, work backward from that date and add buffer.
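Working backward from a go-live date can be sketched in a few lines of Python. Everything here is illustrative: the function name, the target date, the three-month buffer, the 30-day month approximation, and the fiber lead time are assumptions. Only the nine-month colocation figure and the upper end of the procurement range come from earlier in the article.

```python
from datetime import date, timedelta

DAYS_PER_MONTH = 30  # coarse approximation, adequate for planning


def latest_start_dates(go_live: date, lead_times_months: dict[str, int],
                       buffer_months: int = 3) -> dict[str, date]:
    """Latest date each work stream can start and still hit go_live."""
    return {
        item: go_live - timedelta(days=(months + buffer_months) * DAYS_PER_MONTH)
        for item, months in lead_times_months.items()
    }


target = date(2027, 1, 1)  # hypothetical go-live target
lead_times = {"hardware": 18, "colocation": 9, "fiber": 7}  # fiber: assumed

starts = latest_start_dates(target, lead_times)
for item, start in sorted(starts.items(), key=lambda pair: pair[1]):
    print(f"{item}: start by {start.isoformat()}")
```

If any of the printed dates is already in the past, the target date is not achievable without shortening a lead time or cutting into the buffer.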

The hard part isn't buying hardware. It's coordinating everything around that hardware so it can actually be deployed, operated, and monetized.

What Happens If You Wait Too Long?

The competitive advantage of repurposing mining sites will diminish as purpose-built AI/HPC facilities come online. Miners who move thoughtfully but decisively will be better positioned than those who rush in unprepared or wait too long.

Understanding what the transition actually requires is the first step.
